Disinformation is one of the key challenges of our times. Sometimes it may seem that it is everywhere around us: from family gatherings, where heated discussions on politics, society and even personal health choices take place, to the internet, social media and even international politics.

It is not just individuals on the internet who are creating and spreading disinformation now and then. Foreign states, particularly Russia and China, have systematically used disinformation and information manipulation to sow division within our societies and to undermine our democracies, by eroding trust in the rule of law, elected institutions, democratic values and media. Disinformation as part of foreign information manipulation and interference poses a security threat affecting the safety of the European Union and its Member States.

What is disinformation exactly? How can we avoid falling for it, if at all? How can we respond to it? The Learn platform aims to help you find answers to these and other topical questions based on EUvsDisinfo’s collective experience gained since its creation in 2015. Here, you will find some of our best texts and a selection of useful tools, games, podcasts and other resources to build or strengthen your resilience to disinformation. Learn to discern with EUvsDisinfo, #DontBeDeceived and become more resilient.

Define

Fake news

Inaccurate, sensationalist, misleading information. The term “fake news” has strong political connotations and is woefully inaccurate to describe the complexity of the issues at stake. Hence, at EUvsDisinfo we prefer more precise definitions of the phenomenon (e.g. disinformation, information manipulation).

Propaganda

Content disseminated to further one's cause or to damage an opposing cause, often using unethical persuasion techniques. This is a catch-all term with strong historical connotations, thus we rarely use it in our work. Notably, the International Covenant on Civil and Political Rights, adopted by the UN in 1966, states that propaganda for war shall be prohibited by law.

Misinformation

False or misleading content shared without intent to cause harm. However, its effects can still be harmful, e.g. when people share false information with friends and family in good faith.

Disinformation

False or misleading content that is created, presented and disseminated with an intention to deceive or secure economic or political gain and which may cause public harm. Disinformation does not include errors, satire and parody, or clearly identified partisan news and commentary.

Information influence operation

Coordinated efforts by domestic or foreign actors to influence a target audience using a range of deceptive means, including suppressing independent information sources in combination with disinformation.

Foreign information manipulation and interference (FIMI)

A pattern of behaviour in the information domain that threatens values, procedures and political processes. Such activity is manipulative (though usually not illegal), conducted in an intentional and coordinated manner, often in relation to other hybrid activities. It can be pursued by state or non-state actors and their proxies.

Understand

What is Disinformation?

And why should you care?

Some would say that disinformation, or lying, is a part of human interaction. White lies, blatant lies, falsifications, “alternative facts”; propaganda has followed humankind throughout our history. Even the snake in the garden of Eden lied to Adam and Eve!

Others would add that disinformation, especially used for political or geopolitical purposes, is a much more recent invention that became widely used by the totalitarian regimes of the 20th century. And that it was perfected by the KGB - the Soviet Union’s main security agency - which developed so-called “active measures”[1] to sow division and confusion in attempts to undermine the West. And that disinformation continues to be used by Russia for the same purpose to this day. (You can learn more about how Russia has revitalised KGB disinformation methods in our 2019 interview with independent Russian journalist Roman Dobrokhotov.)

There are many ways to answer the question of what disinformation is, and at EUvsDisinfo we have considered its philosophical, technological, political, sociological and communications aspects. We have tried to cover them all in this LEARN section.

Our own story began in 2015, after the European Council, the highest level of decision-making in the European Union, called out Russia as a source of disinformation, and tasked us with challenging Russia’s ongoing disinformation campaigns. Read our story here. In 2014 – the year before EUvsDisinfo was set up – a European country had, for the first time since World War II, used military force to attack and take land from a neighbour: Russia illegally annexed the Ukrainian peninsula of Crimea. Russia’s aggression in Ukraine was accompanied by an overwhelming disinformation campaign, culminating in an all-out invasion and large-scale genocidal violence against Ukraine. Countering Russian disinformation means fighting Russian aggression – as told by Ukrainian fact-checkers Vox Check, who talked to us quite literally from the battlefield trenches where they continue to defend Ukraine.

It is hard to overstate the role of Russian state-controlled media and the wider pro-Kremlin disinformation ecosystem in mobilising domestic support for the invasion of Ukraine.[2] The Kremlin’s grip on the information space in Russia is also an illustration of how authoritarian regimes use state-controlled media as a Tribune, a platform to disseminate instructions to their subjects on how to act and what to think, demanding unconditional loyalty from the audience. This stands in sharp contrast to the understanding of media as a forum where a free exchange of views and ideas takes place; where debates, scrutiny and criticism create public discourse that sustains democracies. (We explore these concepts in our text on propaganda and disempowerment.)

Just like the use of media as a Tribune, pro-Kremlin disinformation is supported by a megaphone – the megaphone of manipulative tactics. The use of bots, trolls, fake websites, fake experts and many other activities that distort the genuine discussions we need for democratic debate is designed to reach as many people as possible, to make them feel uncertain and afraid, and to instil hatred in them. This shows that it is not a matter of free speech. The right to say false or misleading things is protected in our societies. This, however, is a matter of the Kremlin using all this manipulation as a way to be louder than everyone else. Such information manipulation and interference, including disinformation, is what EUvsDisinfo wants to expose, explain and counter.

Disinformation and other information manipulation efforts, which we also cover in LEARN, attempt to poison the public discourse that sustains democracies. Thus, countering disinformation also means defending democracy and standing up against authoritarianism.

Scroll through this section and make sure to check the others, to learn more about the Narratives and Rhetoric of pro-Kremlin disinformation; Disinformation Tactics, Techniques and Procedures; the Pro-Kremlin Media Ecosystem; and Philosophy and Disinformation. Check out the Respond section to learn what you can do about it. And if you are still curious – we have something special for you too!

[1] The New York Times made an excellent documentary on this back in 2018, called “Operation InfeKtion” (available in English).

[2] It is for this reason that the EU has sanctioned several dozen Russian propagandists and suspended the broadcasting of Russian state-controlled outlets such as RT on the territory of the EU.

Narratives and Rhetoric of Disinformation

The Narratives section will introduce the key narratives repeatedly pushed by pro-Kremlin disinformation and the cheap rhetorical tricks that the Kremlin uses to gain the upper hand in the information space. This section also discusses the lure of conspiracy theories and finally uncovers the dangers of hate speech. We will explain all pro-Kremlin tools and tricks used to erode trust; discourage, confuse and disempower citizens; attack democratic values, institutions and countries; and incite hate and violence.

The Key Narratives in Pro-Kremlin Disinformation

A narrative is an overall message communicated through texts, images, metaphors, and other means. Narratives help relay a message by creating suspense and making information attractive. Pro-Kremlin narratives are harmful and form a part of information manipulation. They are designed to foster distrust and a feeling of disempowerment, and thus increase polarisation and social fragmentation. Ultimately, these narratives are intended to undermine trust in democratic institutions and liberal democracy itself as a form of governing.

We have identified six major repetitive narratives that pro-Kremlin disinformation outlets use in order to undermine democracy and democratic institutions, in particular in “the West”.

These narratives are: 1) The Elites vs. People; 2) The 'Threatened Values'; 3) Lost Sovereignty; 4) The Imminent Collapse; 5) Hahaganda; and 6) Unfounded accusations of Nazism.

Rhetorical Devices as Kremlin Cheap Tricks

The Kremlin's cheap tricks are a series of rhetorical devices used to, among other purposes, deflect criticism, discourage debate, and discredit any opponents.

These rhetorical devices are designed to occupy the information space, create an element of uncertainty, and to exhaust any opposition. They are often used in combination with each other to create a more effective disinformation campaign.

The rhetorical devices that pro-Kremlin outlets and online trolls alike use include the straw man, whataboutism, attack, mockery, provocation, exhaust, and denial.

For example, the straw man is a rhetorical device where the troll attacks views or ideas never expressed by the opponent. The Kremlin also frequently uses attack as a cheap trick to discourage the opposition from continuing the conversation. Sarcasm, mockery, and ridicule are also common Kremlin tactics to gain advantage in a debate. Finally, the Kremlin often uses denial to discredit opponents and dismiss any evidence that raises questions about Russian accountability.

The Lure of Conspiracy Theories for Authoritarian Leaders

Conspiracy theories are a potent element not only for creating an enticing plot in thrillers, but also for propaganda purposes. One of the many conspiracy theories that have made their way onto Russian TV is the Shadow Government conspiracy theory. It is based on the belief that there is a small group of people, hiding from us, controlling the world.

From the propaganda perspective, the charm of the Shadow Government theory is that it can be filled with anything you want. Catholics, bankers, Jews, feminists, freemasons, “Big Pharma”, Muslims, the gay lobby, bureaucrats - all depending on your target audience.

The goal of the Shadow Government narrative is to question the legitimacy of democracy and our institutions. What is the point of voting if the Shadow Government already rules the world? What is the point of being elected if the Deep State resists all attempts to reform? We, as voters, citizens and human beings, are disempowered through the Shadow Government narrative. Ultimately, the narrative is designed to make us give up voting or exercising our right to express our views.

Hate Speech Is Dangerous

Hate speech is any kind of communication in speech, writing or behaviour that attacks or uses pejorative or discriminatory language with reference to a person or group on the basis of who they are, in other words, based on their religion, ethnicity or affiliation.

Hate speech is dangerous as it can lead to wide-scale human rights violations, as we have witnessed most recently in Ukraine. It can also be used to dehumanise an opponent, making them seem less than human and therefore not worthy of the same rights and treatment.

Russian leaders and media have been increasingly using genocide-inciting hate speech against Ukraine and its people since the annexation of Crimea in 2014 and with increasing intensity before the full-scale invasion in February 2022. Portraying the legitimate government in Kyiv and the wider Ukrainian population as sub-human enables the general Russian population and Russian soldiers alike to justify atrocities against them.

Tactics, Techniques and Procedures of Disinformation

For years, work against disinformation revolved around a few central questions: is a piece of information true or false? If it is false, is it accidentally or intentionally so? If it is intentionally false or misleading, what is the purpose of its creator or amplifier? Let’s call this a content-based approach to the problem – a way of monitoring, detecting, and analysing disinformation that is largely focused on the content.

While content is and will remain an integral part of all information manipulation operations, focusing just on that most visible part does not give us a full picture. This is why we have been pivoting to an approach that also includes the analysis of behaviour in the information space. Central to the approach of detecting, analysing, and understanding foreign information manipulation and interference, including disinformation (FIMI), is an ever-evolving set of tactics, techniques and procedures (TTPs).

The logic of TTPs explained

Using TTPs to identify and analyse patterns of manipulative behaviour is far from new. Confronted with similar challenges, the cyber- and information security community developed the ATT&CK framework back in 2013. It addressed a complex challenge by providing a structure for organising adversary TTPs that allows analysts to categorise adversary behaviours and communicate them in a way that is easily understandable and actionable by defenders.

Based on the ATT&CK framework, the cross-Atlantic DISARM Foundation (in collaboration with Cognitive Security Collaborative, Alliance 4 Europe, and many others) set up a similar framework for information manipulation – the DISARM Framework. It is a free and open resource for the global counter-disinformation community. It is not the only one out there, but it is currently one of the most advanced of its kind. In the simplest terms, it provides a single, standard language for describing information manipulation tactics, techniques, and procedures.

Examples of TTPs

The DISARM Framework organises all the TTPs that are known to be used in information manipulation operations – currently standing at about 250 – into an easily comprehensible system. The framework spans 12 tactical steps of an information operation, from planning the strategy and objectives to developing narratives and content and delivering a final assessment.

The TTPs mapped in the framework cover everything from well-known techniques (e.g. creating fake accounts, building bot networks, amplifying conspiracy narratives, using fake experts etc.) to less often talked about ones (e.g. exploiting data voids, utilising butterfly attacks, spamouflaging etc.). The list of TTPs in the framework is far from final as malign actors keep innovating and the threat landscape keeps evolving. Thanks to the fact that the DISARM Framework is an open and joint effort, it is easily modifiable to keep up with the latest insights and trends in information manipulation.

Needless to say, not all information operations include all the phases laid out in the framework, let alone the 250 or so TTPs listed. The idea of the framework is to map out and present a complete picture that we can then use to analyse and systematise an information manipulation operation.

Analysing the behaviour of malign actors by no means implies that the content of information manipulation operations loses its relevance. Quite the contrary – looking at both the behaviour of malign actors and the content used in their operations gives us a much better understanding of the overall threat landscape. Furthermore, approaching the problem equipped with an organised set of TTPs makes information manipulation – an infamously elusive concept – much more measurable. The additional benefit of a common language of clearly defined TTPs is that the work of analysts worldwide becomes more comparable and interoperable.
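To make the idea of a common TTP vocabulary more concrete, here is a minimal Python sketch of how incidents tagged with shared technique labels could be counted and compared. It is an illustration only: the incident names and technique labels are hypothetical placeholders loosely based on the examples mentioned above, not real DISARM identifiers or real cases, and the helper functions are not part of any official DISARM tooling.

```python
from collections import Counter

# Hypothetical incidents, each tagged with hypothetical technique labels.
# In practice, analysts would use identifiers from a shared framework.
INCIDENTS = {
    "incident-a": {"fake-accounts", "bot-network", "fake-experts"},
    "incident-b": {"fake-accounts", "data-void-exploitation"},
    "incident-c": {"bot-network", "fake-accounts"},
}


def technique_frequency(incidents):
    """Count in how many incidents each technique label appears."""
    counts = Counter()
    for techniques in incidents.values():
        counts.update(techniques)
    return counts


def shared_techniques(incidents, first, second):
    """Return the technique labels that two incidents have in common."""
    return incidents[first] & incidents[second]


if __name__ == "__main__":
    # Print the labels from most to least frequently observed.
    for label, count in technique_frequency(INCIDENTS).most_common():
        print(f"{label}: {count}")
    # Compare two incidents described with the same vocabulary.
    print(sorted(shared_techniques(INCIDENTS, "incident-a", "incident-c")))
```

Because every incident is described with the same set of labels, simple counts and overlaps like these become possible across reports from different analysts, which is the sense in which a shared vocabulary makes the work more comparable.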

Addressing information manipulation as a behavioural problem enables us to come up with responses that are targeted, scalable, more objective, and go beyond awareness raising and the debunking and prebunking of misleading or false narratives. A malign actor who wishes to manipulate the information environment needs to follow certain TTPs that we can now understand, detect, and make more costly even before reactive responses become necessary.

Pro-Kremlin Media Ecosystem

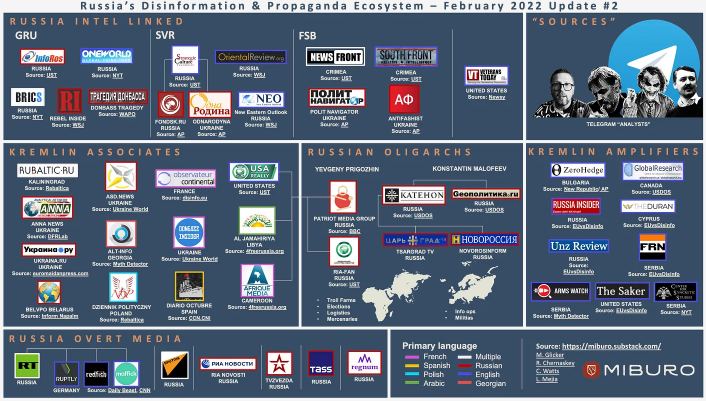

Russia’s attempts to disinform and manipulate in the information space are a global operation. It is an ecosystem of state-funded global messaging where regime representatives speak in unison with the media, organisations, offline and online proxies, and even the Orthodox church. It is an elaborate system using a wide array of techniques, tactics, and procedures, and speaking in dozens of languages – all with the aim of sowing discord, manipulating audiences, and undermining democracy.

EUvsDisinfo has been tracking Russia’s disinformation for years. We have gotten better at detecting and responding to the manipulation attempts. There is now a robust body of evidence of disinformation and manipulation. Nevertheless, Russia keeps attempting to manipulate and sow chaos, and other actors follow suit or, as in the case of China, develop their own playbook of information manipulation and interference, including disinformation.

The ecosystem consists of five main pillars:

- official government communications;

- state-funded global messaging;

- the cultivation of proxy sources;

- the weaponisation of social media;

- and cyber-enabled information manipulation.

The ecosystem reflects both the sources of information manipulation and disinformation and the tactics that these channels use.

Source: Pillars of Russia's Disinformation and Propaganda Ecosystem, GEC

RT and Sputnik

The main instruments bringing the Kremlin’s disinformation to audiences outside of Russia are RT (available in over 100 countries plus online) and Sputnik (a ‘news’ website in over 30 languages). Both outlets are state-funded and state-directed. With an annual budget of hundreds of millions of dollars, RT and Sputnik’s basic role is to spread disinformation and propaganda narratives via their own channels, websites, and multiple social media accounts (now blocked in the EU due to sanctions connected to Russia’s aggression against Ukraine).

RT and Sputnik also interact with other pillars of the ecosystem. They amplify content from Kremlin and Kremlin-aligned proxy sites, exploit social media to reach as many audiences as possible, and promote cyber-enabled disinformation.

Both outlets attempt to equate themselves with major independent and professional international media outlets. They have been trying to increase both their reach and credibility that way. This is also why they portray any criticism towards them as either Russophobia or as violations of media freedom. The same goes for the numerous penalties and the EU sanctions, which RT tried, and failed, to fight in the European Court of Justice.

Moreover, these outlets do not have – and do not seek – any editorial independence, and are instructed by the Kremlin on what to report and how.

In reality, RT and Sputnik’s organisational set-ups and goals are fundamentally different from independent media. RT was included in an official list of core organisations of strategic importance for Russia. RT’s own editor-in-chief, Margarita Simonyan, defines the mission of the outlet in military terms, publicly equating the need for RT with the need for a Defence Ministry. Simonyan also made clear that RT’s mission is to serve the Russian state as an ‘information weapon’ in times of conflict.

Spreading its tentacles

The Kremlin’s tentacles in the information space go way beyond RT and Sputnik. RT is affiliated with Rossiya Segodnya through Margarita Simonyan, the editor-in-chief of both RT and Rossiya Segodnya. Moreover, RT’s parent company, TV-Novosti, was founded by RIA Novosti, and RIA Novosti’s founder’s rights were transferred to Rossiya Segodnya via a presidential executive order in 2013. By the way, the head of Rossiya Segodnya, Dmitry Kiselyov, was sanctioned by the EU back in 2014 for his role as a ‘central figure of the government propaganda supporting the deployment of Russian forces in Ukraine’.

Public records show that some employees work for both RT and Rossiya Segodnya despite the two organisations claiming to be separate. In some cases, staff working for Rossiya Segodnya have worked for other Kremlin-affiliated outlets at the same time.

This is only the tip of the iceberg. There is a well-documented relationship between RT and other pillars in the Russian disinformation ecosystem – a collection of official, proxy, and unattributed communication channels and platforms that Russia uses to create and amplify narratives. These include, among others:

- audiovisual services such as Ruptly (subsidiary of RT);

- Redfish, a media collective;

- Maffick Media, an outlet hosting different TV shows (owned by Ruptly);

- ICYMI and In The Now, social media TV channels;

- an array of websites, some of them connected to Russian intelligence services, such as News Front, South Front, InfoRos, and the Strategic Culture Foundation;

- the Internet Research Agency, a troll farm linked to Yevgeniy Prigozhin, who is also behind Russia’s mercenary organisation, the Wagner Group;

- hundreds of inauthentic accounts and pages on different social media platforms (examples from Facebook and Instagram, Twitter: here and here);

- Russian TV channels, also available via satellite in different parts of the world;

… and many, many, many more.

A special place on this list is reserved for outlets connected to and directed by the Belarusian regime, which now act in coordination with the Russian ecosystem (examples here, here and here).

Image from Clint Watts

Russia’s ecosystem of disinformation and information manipulation is about shouting disinformation and propaganda from the rooftops and spreading disinformation as widely as possible using different tactics, techniques, and procedures.

Disinformation and Philosophy

Other parts of ‘Understand’ focus on narratives, techniques, actors and technological aspects of foreign information manipulation and interference, including disinformation.

However, approaching the matter only from a technological angle ignores that disinformation is an idea. It also ignores that, when practised, disinformation uses other ideas and is often based on old concepts and metaphors.

Therefore, in our 2021 series called “Disinformation and Philosophy” we explored the historical evolution of disinformation as an idea.

Broadly speaking, an idea is a thought, concept, sensation, or image that is or could be present in the mind. We asked what the greatest thinkers in the history of philosophy would make of disinformation.